Research Projects

Nonverbal Interaction with Vision-Language Models

Current large language models and vision-language models rely mainly on natural language instructions and do not make full use of nonverbal information, which is an essential part of human communication. This study seeks to integrate physical expressions such as gaze, gestures, and facial expressions as input modalities for language models, aiming to create more intuitive and natural human–AI interaction. By developing core technologies that treat bodily actions themselves as input commands for AI, we aim to open the way to communication styles beyond spoken language while also improving the accessibility and inclusiveness of AI systems.

Large Language Models

Vision-Language Models

Human-Computer Interaction

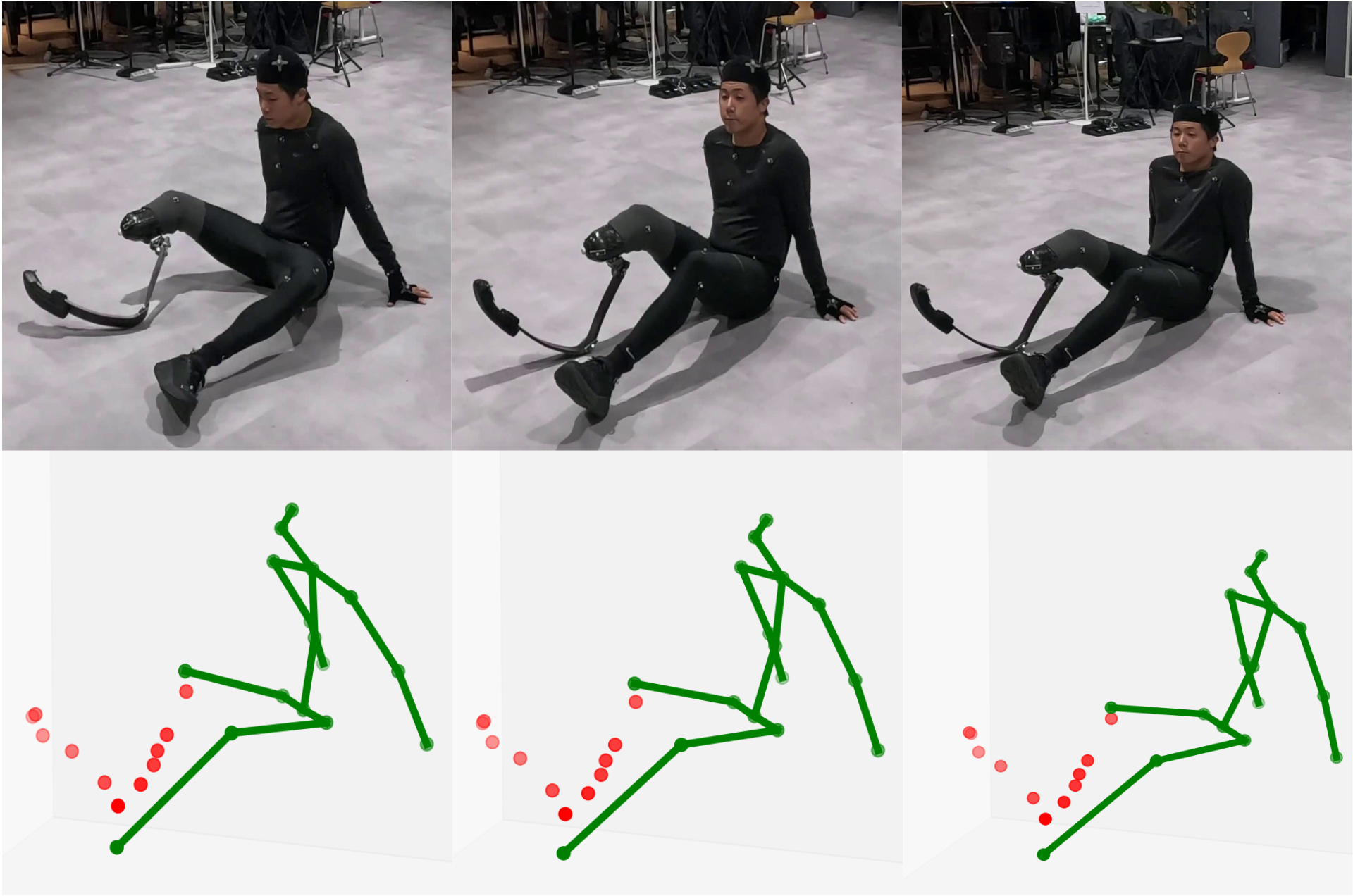

Inclusive 3D Pose Estimation for Prosthesis Users

Current pose estimation technologies are designed around the assumption of a “standard” body, which excludes people with diverse physical characteristics, including prosthesis users. This research addresses the fundamental gap between statistical machine learning models and individual diversity, aiming to develop a new pose estimation method tailored to prosthesis users. Alongside the technical development, we are building a process that values dialogue and co-creation with the people concerned, striving to realize true inclusiveness in the development of AI technologies.

Computer Vision

Participatory Design

xDiversity

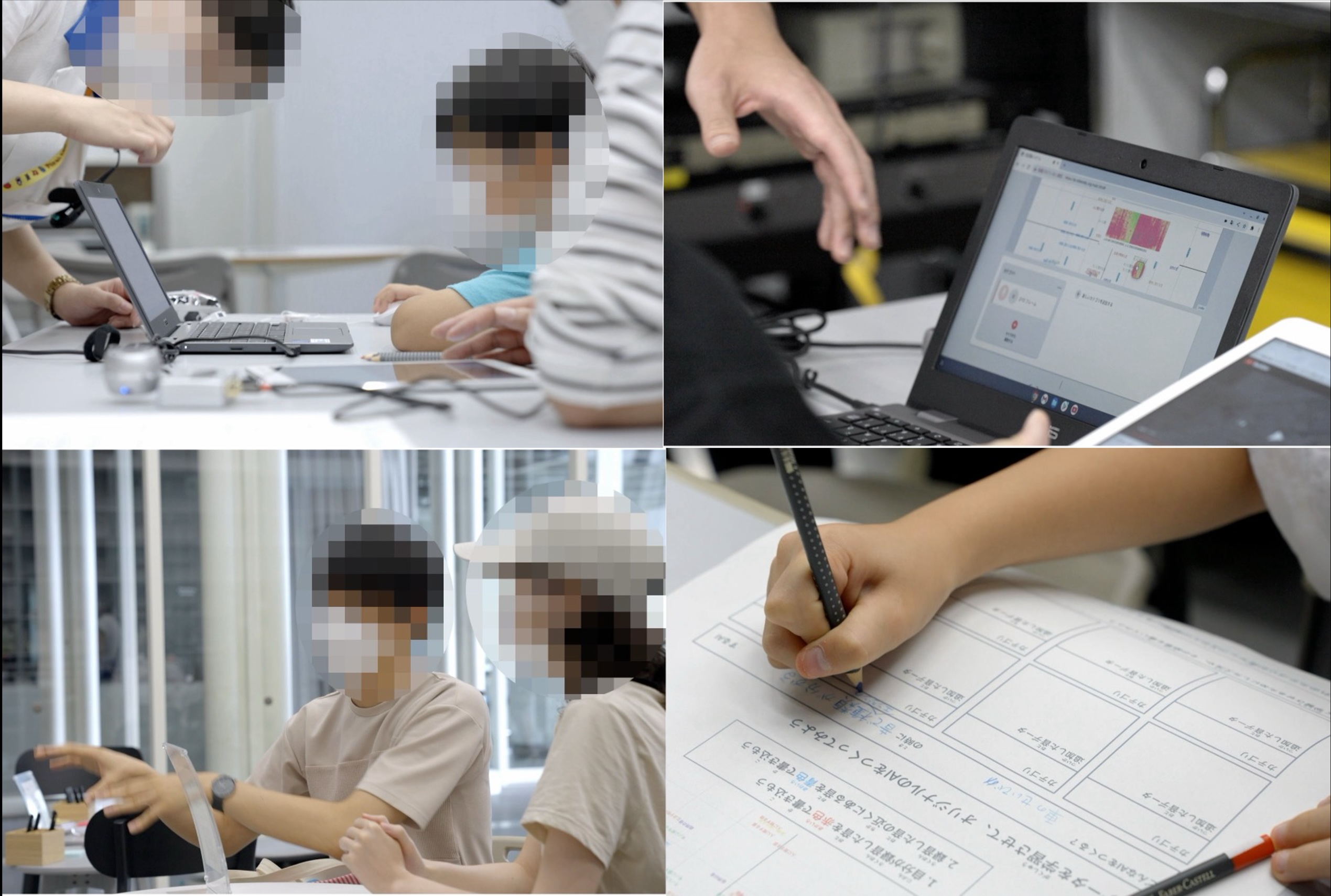

Participatory Training Data Collection through Gamification

High-quality, large-scale training data is essential for improving the performance of machine learning models. However, traditional data collection methods are often repetitive and monotonous, making it difficult to sustain participants’ motivation. This study builds a framework that combines the data collection process with game elements, allowing participants to contribute valuable data while enjoying the experience. In tasks such as gaze estimation and image recognition, we gather diverse language descriptions and behavioral data through cooperative gameplay among players, while also fostering participants’ understanding of and interest in AI technologies.

Machine Learning

Participatory Design

Interactive Machine Learning for Non-Specialists

When designing systems based on machine learning, it is often not enough to simply apply pre-trained models. In many cases, it is essential to provide a framework that allows users themselves to actively design their own recognition models. Our group addresses this challenge by developing systems that make machine learning accessible as a tool for general users. Our work spans visualization methods, interface design for interactive machine learning environments, and analyses of workshops, combining system development with user evaluation and public experiments.

Machine Learning

Human-Computer Interaction

xDiversity

Appearance-based Gaze Estimation

Recognizing where a person is looking within an environment makes it possible to estimate internal states related to attention and to present information adapted to the person’s focus. However, traditional gaze estimation methods have relied mainly on dedicated hardware, which has limited their applications. We are developing a gaze estimation approach that requires only camera images as input, powered by large-scale training datasets and machine learning.

Computer Vision

Machine Learning